I recently came across an article from Adam Piskorek about the way Google tests its software.

While I was already familiar with the book How Google Tests Software (by James Whittaker, Jason Arbon et al, 2012), Adam’s article introduced another newer book about how Google approaches software engineering more generally, Software Engineering at Google: Lessons Learned from Programming Over Time (by Titus Winters, Tom Manshreck & Hyrum Wright, 2020).

The following quote in Adam’s article is lifted from this newer book and made me want to dive deeper into the book’s broader content around testing*:

Attempting to assess product quality by asking humans to manually interact with every feature just doesn’t scale. When it comes to testing, there is one clear answer: automation.

Chapter 11 (Testing Overview), p210 (Adam Bender)

I was stunned by this quote from the book. It felt like they were saying that development simply goes too quickly for adequate testing to be performed and also that automation is seen as the silver bullet to moving as fast as they desire while maintaining quality, without those pesky slow humans interacting with the software they’re pushing out.

But, in the interests of fairness, I decided to study the four main chapters of the book devoted to testing to more fully understand how they arrived at the conclusion in this quote: chapter 11, which offers an overview of the testing approach at Google; chapter 12, devoted to unit testing; chapter 13, on test doubles; and chapter 14, on “Larger Testing”. The book is, perhaps unsurprisingly, available to read freely on Google Books.

I didn’t find anything too controversial in chapter 12, rather mostly sensible advice around unit testing. The following quote from this chapter is worth noting, though, as it highlights that “testing” generally means automated checks in their world view:

After preventing bugs, the most important purpose of a test is to improve engineers’ productivity. Compared to broader-scoped tests, unit tests have many properties that make them an excellent way to optimize productivity.

Chapter 13 on test doubles was similarly straightforward, covering the challenges of mocking and giving decent advice around when to opt for faking, stubbing and interaction testing as approaches in this area. Chapter 14 dealt with the challenges of authoring tests of greater scope and I again wasn’t too surprised by what I read there.

It is chapter 11 of this book, Testing Overview (written by Adam Bender), that contains the most interesting content in my opinion and the remainder of this blog post looks in detail at this chapter.

The author says:

since the early 2000s, the software industry’s approach to testing has evolved dramatically to cope with the size and complexity of modern software systems. Central to that evolution has been the practice of developer-driven, automated testing.

I agree that the general industry approach to testing has changed a great deal in the last twenty years. These changes have been driven in part by changes in technology and the ways in which software is delivered to users. They’ve also been driven to some extent by the desire to cut cost and it seems to me that focusing more on automation has been seen (misguidedly) as a way to reduce the overall cost of delivering software solutions. This focus has led to a reduction in the investment in humans to assess what we’re building and I think we all too often experience the results of that reduced level of investment.

Automated testing can prevent bugs from escaping into the wild and affecting your users. The later in the development cycle a bug is caught, the more expensive it is; exponentially so in many cases.

Given the perception of Google as a leader in IT, I was very surprised to see this nonsense about the cost of defects being regurgitated here. This idea is “almost entirely anecdotal” according to Laurent Bossavit in his excellent The Leprechauns of Software Engineering book and he has an entire chapter devoted to this particular mythology. I would imagine that fixing bugs in production for Google is actually inexpensive given the ease with which they can go from code change to delivery into the customer’s hands.

Much ink has been spilled about the subject of testing software, and for good reason: for such an important practice, doing it well still seems to be a mysterious craft to many.

I find the choice of words here particularly interesting, describing testing as “a mysterious craft”. While I think of software testing as a craft, I don’t think it’s mysterious, although my experience suggests that it’s very difficult to perform well. I’m not sure whether the wording is a subtle dig at parts of the testing industry in which testing is discussed as a craft (e.g. the context-driven testing community) or whether they are genuinely trying to clear up some of the perceived mystery by explaining in some detail how Google approaches testing in this book.

The ability for humans to manually validate every behavior in a system has been unable to keep pace with the explosion of features and platforms in most software. Imagine what it would take to manually test all of the functionality of Google Search, like finding flights, movie times, relevant images, and of course web search results… Even if you can determine how to solve that problem, you then need to multiply that workload by every language, country, and device Google Search must support, and don’t forget to check for things like accessibility and security. Attempting to assess product quality by asking humans to manually interact with every feature just doesn’t scale. When it comes to testing, there is one clear answer: automation.

(note: bold emphasis is mine)

We then come to the source of the quote that first piqued my interest. I find it interesting that they seem to be suggesting the need to “test everything” and using that as a justification for saying that using humans to interact with “everything” isn’t scalable. I’d have liked to see some acknowledgement here that the intent is not to attempt to test everything, but rather to make skilled, risk-based judgements about what’s important to test in a particular context for a particular mission (i.e. what are we trying to find out about the system?). The subset of the entire problem space that’s important to us is something we can potentially still ask humans to interact with in valuable ways. The “one clear answer” for testing being “automation” makes little sense to me, given the well-documented shortcomings of automated checks (some of which are acknowledged in this same book) and the different information we should be looking to gather from human interactions with the software compared to that from algorithmic automated checks.

Unlike the QA processes of yore, in which rooms of dedicated software testers pored over new versions of a system, exercising every possible behavior, the engineers who build systems today play an active and integral role in writing and running automated tests for their own code. Even in companies where QA is a prominent organization, developer-written tests are commonplace. At the speed and scale that today’s systems are being developed, the only way to keep up is by sharing the development of tests around the entire engineering staff.

Of course, writing tests is different from writing good tests. It can be quite difficult to train tens of thousands of engineers to write good tests. We will discuss what we have learned about writing good tests in the chapters that follow.

I think it’s great that developers are more involved in testing than they were in the days of yore. Well-written automated checks provide some safety around changing product code and help to prevent a skilled tester from wasting their time on known “broken” builds. But, again, the only discussion that follows in this particular book (as promised in the last sentence above) is about automation and not skilled human testing.

Fast, high-quality releases

With a healthy automated test suite, teams can release new versions of their application with confidence. Many projects at Google release a new version to production every day—even large projects with hundreds of engineers and thousands of code changes submitted every day. This would not be possible without automated testing.

The ability to get code changes to production safely and quickly is appealing and having good automated checks in place can certainly help to increase the safety of doing so. “Confidence” is an interesting choice of word to use around this (and is used frequently in this book), though – the Oxford dictionary definition of “confidence” is “a feeling or belief that one can have faith in or rely on someone or something”, so the “healthy automated test suite” referred to here appears to be one that these engineers feel comfortable to rely on enough to say whether new code should go to production or not.

The other interesting point here is about the need to release new versions so frequently. While it makes sense to have deployment pipelines and systems in place that enable releasing to production to be smooth and uneventful, the desire to push out changes to customers very frequently seems like an end in itself these days. For most testers in most organizations, there is probably no need or desire for such frequent production changes, so basing a testing strategy on the perceived need for these frequent changes could lead to goal displacement – and potentially take an important aspect of assessing those changes (viz. human testers) out of the picture altogether.

If test flakiness continues to grow you will experience something much worse than lost productivity: a loss of confidence in the tests. It doesn’t take needing to investigate many flakes before a team loses trust in the test suite. After that happens, engineers will stop reacting to test failures, eliminating any value the test suite provided. Our experience suggests that as you approach 1% flakiness, the tests begin to lose value. At Google, our flaky rate hovers around 0.15%, which implies thousands of flakes every day. We fight hard to keep flakes in check, including actively investing engineering hours to fix them.

It’s good to see this acknowledgement of the issues around automated check stability and the propensity for unstable checks to lead to a collapse in trust in the entire suite. I’m interested to know how they go about categorizing failing checks as “flaky” to be included in their overall 0.15% “flaky rate”; no doubt there’s some additional human effort involved there too.
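To get a feel for what “0.15%” and “thousands of flakes every day” imply together, here is a rough back-of-the-envelope sketch in Python. It assumes the flaky rate means the fraction of individual test runs that flake, which the quote doesn’t spell out, and the function names are my own. The only figure taken from the book is the 0.15% rate; any daily run count you plug in is hypothetical.

```python
import math

# 0.15%, the flaky rate quoted from the book.
FLAKY_RATE = 0.0015


def expected_flakes(test_runs_per_day: int, flaky_rate: float = FLAKY_RATE) -> float:
    """Expected flaky results per day, ASSUMING the rate is per test run."""
    return test_runs_per_day * flaky_rate


def runs_needed_for(flakes_per_day: int, flaky_rate: float = FLAKY_RATE) -> int:
    """Smallest daily run count at which expected flakes reach the target."""
    return math.ceil(flakes_per_day / flaky_rate)


if __name__ == "__main__":
    # How many runs per day would it take to see at least 1,000 flakes?
    print(runs_needed_for(1000))
    # A hypothetical volume of one million runs per day.
    print(round(expected_flakes(1_000_000)))
```

Under that assumption, “thousands of flakes” at a 0.15% rate only happens once the suite is executing well over half a million test runs a day, which underlines how far removed this scale is from most teams’ reality.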

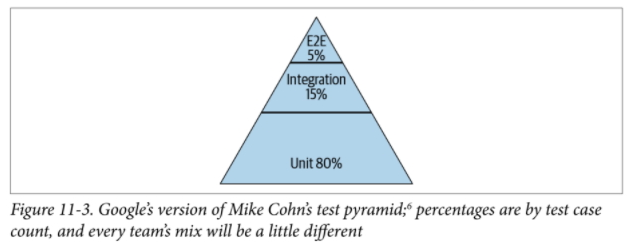

Just as we encourage tests of smaller size, at Google, we also encourage engineers to write tests of narrower scope. As a very rough guideline, we tend to aim to have a mix of around 80% of our tests being narrow-scoped unit tests that validate the majority of our business logic; 15% medium-scoped integration tests that validate the interactions between two or more components; and 5% end-to-end tests that validate the entire system. Figure 11-3 depicts how we can visualize this as a pyramid.

It was inevitable during coverage of automation that some kind of “test pyramid” would make an appearance! In this case, they use the classic Mike Cohn automated test pyramid but I was shocked to see them labelling the three different layers with percentages based on test case count. By their own reasoning, the tests in the different layers are of different scope (that’s why they’re in different layers, right?!) so counting them against each other really makes no sense at all.
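A small sketch makes the problem with count-based percentages concrete. The 80/15/5 split below comes from the book’s guideline, but the per-test “scope” weights are entirely invented for illustration (they are not from the book or from any real suite), and the function name is my own. The point is only that the same mix can look completely different once you stop counting test cases as if they were equivalent units.

```python
# Share of the suite by test COUNT, per the book's rough guideline.
COUNT_SHARE = {"unit": 0.80, "integration": 0.15, "end_to_end": 0.05}

# HYPOTHETICAL relative scope of one test in each layer (say, how much
# behaviour a single test exercises). Invented numbers, for illustration only.
ASSUMED_SCOPE_PER_TEST = {"unit": 1, "integration": 10, "end_to_end": 100}


def share_by_scope(count_share: dict, scope_per_test: dict) -> dict:
    """Re-express a count-based mix as each layer's share of total scope."""
    weighted = {
        layer: count_share[layer] * scope_per_test[layer] for layer in count_share
    }
    total = sum(weighted.values())
    return {layer: value / total for layer, value in weighted.items()}


if __name__ == "__main__":
    for layer, share in share_by_scope(COUNT_SHARE, ASSUMED_SCOPE_PER_TEST).items():
        print(f"{layer}: {share:.0%}")
```

With these made-up weights, the 5% of tests that are end-to-end account for the largest share of scope and the 80% that are unit tests account for the smallest, so the “pyramid” flips. Different weights would give a different picture, which is exactly why a percentage by test count tells us so little.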

Our recommended mix of tests is determined by our two primary goals: engineering productivity and product confidence. Favoring unit tests gives us high confidence quickly, and early in the development process. Larger tests act as sanity checks as the product develops; they should not be viewed as a primary method for catching bugs.

The concept of “confidence” being afforded by particular kinds of checks arises again and it’s also clear that automated checks are viewed as enablers of productivity.

Trying to answer the question “do we have enough tests?” with a single number ignores a lot of context and is unlikely to be useful. Code coverage can provide some insight into untested code, but it is not a substitute for thinking critically about how well your system is tested.

It’s good to see context being mentioned and also the shortcomings of focusing on coverage numbers alone. What I didn’t really find anywhere in what I read in this book was the critical thinking that would lead to an understanding that humans interacting with what’s been built is also a necessary part of assessing whether we’ve got what we wanted. The closest they get to talking about humans experiencing the software in earnest comes from their thoughts around “exploratory testing”:

Exploratory Testing is a fundamentally creative endeavor in which someone treats the application under test as a puzzle to be broken, maybe by executing an unexpected set of steps or by inserting unexpected data. When conducting an exploratory test, the specific problems to be found are unknown at the start. They are gradually uncovered by probing commonly overlooked code paths or unusual responses from the application. As with the detection of security vulnerabilities, as soon as an exploratory test discovers an issue, an automated test should be added to prevent future regressions.

Using automated testing to cover well-understood behaviors enables the expensive and qualitative efforts of human testers to focus on the parts of your products for which they can provide the most value – and avoid boring them to tears in the process.

This description of what exploratory testing is and what it’s best suited to is completely unfamiliar to me, as a practitioner of exploratory testing for fifteen years or so. I don’t treat the software “as a puzzle to be broken” and I’m not even sure what it would mean to do so. It also doesn’t make sense to me to say “the specific problems to be found are unknown at the start”; surely this applies to any type of testing? If we already knew what the problems were, we wouldn’t need to test to discover them. My exploratory testing efforts are not focused on “commonly overlooked code paths” either; in fact, I’m rarely interested in the code but rather in the behaviour of the software as experienced by the end user. Given that “exploratory testing” as an approach has been formally defined for such a long time (and refined over that time), it concerns me to see such a different notion being labelled as “exploratory testing” in this book.

TL;DRs

Automated testing is foundational to enabling software to change.

For tests to scale, they must be automated.

A balanced test suite is necessary for maintaining healthy test coverage.

“If you liked it, you should have put a test on it.”

Changing the testing culture in organizations takes time.

In wrapping up chapter 11 of the book, the focus is again on automated checks with essentially no mention of human testing. The scaling issue is highlighted here also, but thinking solely in terms of scale is missing the point, I think.

The chapters of this book devoted to “testing” in some way cover a lot of ground, but the vast majority of that journey is devoted to automated checks of various kinds. Given Google’s reputation and perceived leadership status in IT, I was really surprised to see mention of the “cost of change curve” and the test automation pyramid, but not surprised by the lack of focus on human exploratory testing.

Circling back to that triggering quote I saw in Adam’s blog (“Attempting to assess product quality by asking humans to manually interact with every feature just doesn’t scale”), I didn’t find an explanation of how they do in fact assess product quality – at least in the chapters I read. I was encouraged that they used the term “assess” rather than “measure” when talking about quality (on which James Bach wrote the excellent blog post, Assess Quality, Don’t Measure It), but I only read about their various approaches to using automated checks to build “confidence”, etc. rather than how they actually assess the quality of what they’re building.

I think it’s also important to consider your own context before taking Google’s ideas as a model for your own organization. The vast majority of testers don’t operate in organizations of Google’s scale and so don’t need to copy their solutions to these scaling problems. It seems we’re very fond of taking models, processes, methodologies, etc. from one organization and trying to copy the practices in an entirely different one (the widespread adoption of the so-called “Spotify model” is a perfect example of this problem).

Context is incredibly important and, in this particular case, I’d encourage anyone reading about Google’s approach to testing to be mindful of how different their scale is and not use the argument from the original quote that inspired this post to argue against the need for humans to assess the quality of the software we build.

* It would be remiss of me not to mention a brilliant response to this same quote from Michael Bolton – in the form of his 47-part Twitter thread (yes, 47!).